With the AI infrastructure race intensifying and GPU supply still tight, startups that can help teams extract more performance from existing hardware are attracting serious attention. Enter Tensormesh, which has just emerged from stealth with $4.5 million in seed funding to do exactly that.

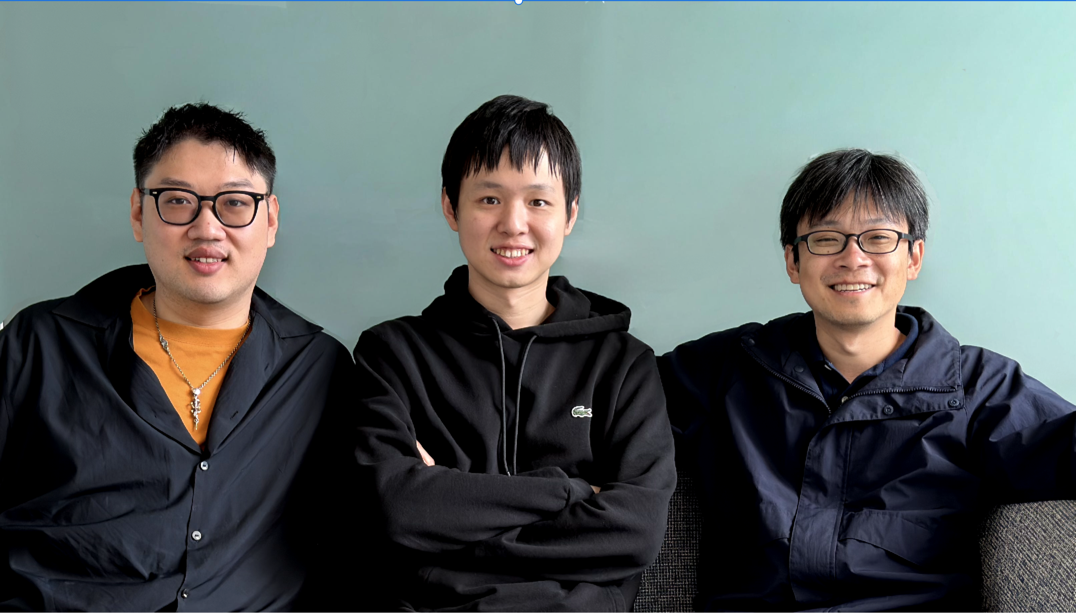

The round was led by Laude Ventures, with participation from angel investors including Michael Franklin, a legendary database researcher and pioneer in distributed systems.

Tensormesh’s mission: help AI companies dramatically cut inference costs by optimizing how servers reuse memory during model execution — an area often overlooked in the scramble for more GPUs.

The Tech: Turning Memory into a Multiplier

At the core of Tensormesh’s approach is a commercial-grade version of LMCache, an open-source project built by co-founder Yihua Cheng. LMCache has already gained traction among AI developers and enterprise teams, with early integrations from Google and Nvidia, who use it to improve inference throughput and lower compute waste.

When applied correctly, LMCache can reduce inference costs by up to 10x — a figure that’s rapidly turning heads in the compute-hungry AI ecosystem.

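The company’s proprietary engine optimizes how key-value caches (KV caches) are stored and reused. These memory structures hold the attention keys and values a large language model computes for every token it has already processed, so the model does not have to recompute them each time it generates the next token. Normally, they are discarded after each query.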

“It’s like having a brilliant analyst who reads everything but forgets it all after every question,” explained Junchen Jiang, co-founder and CEO of Tensormesh. “We make sure they remember, so the next question gets answered faster and cheaper.”

Instead of throwing away these caches, Tensormesh’s system retains them and reuses them for new queries that contain text the model has already processed, effectively letting models remember their past work. The result is higher throughput, lower latency, and more efficient GPU utilization, all without changing the model itself.
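To make the idea concrete, here is a minimal Python sketch of prefix-based KV cache reuse. It is not Tensormesh’s or LMCache’s implementation. `compute_kv` is a hypothetical stand-in for a transformer’s per-token key/value computation, and the chunk size of 4 is an arbitrary assumption. Because a token’s keys and values depend on every token before it, each cached chunk is keyed by the entire prefix that ends with it, so only queries that share an earlier query’s opening text can reuse its work.

```python
from typing import Dict, List, Tuple

CHUNK_SIZE = 4  # hypothetical; production systems use much larger chunks

KV = Tuple[int, int]  # toy stand-in for one token's (key, value) tensors


class PrefixKVCache:
    """Toy KV cache keyed by token prefix and reused across queries."""

    def __init__(self) -> None:
        # Maps a prefix ending on a chunk boundary to the KV entries of its
        # last chunk.
        self.store: Dict[Tuple[int, ...], List[KV]] = {}
        self.tokens_computed = 0  # counts "expensive" per-token computations

    def compute_kv(self, prefix: Tuple[int, ...], token: int) -> KV:
        # Hypothetical stand-in for the attention key/value projection.
        # The result depends on the whole prefix, as in a real transformer.
        self.tokens_computed += 1
        return (hash(prefix) ^ token, token * 31)

    def prefill(self, tokens: List[int]) -> List[KV]:
        kv: List[KV] = []
        for start in range(0, len(tokens), CHUNK_SIZE):
            end = start + CHUNK_SIZE
            chunk = tokens[start:end]
            key = tuple(tokens[:end])
            full = len(chunk) == CHUNK_SIZE
            if full and key in self.store:
                kv.extend(self.store[key])  # cache hit: reuse stored work
                continue
            chunk_kv = [
                self.compute_kv(tuple(tokens[: start + i]), tok)
                for i, tok in enumerate(chunk)
            ]
            if full:
                self.store[key] = chunk_kv  # only complete chunks are cached
            kv.extend(chunk_kv)
        return kv


cache = PrefixKVCache()
system_prompt = list(range(100, 112))  # 12 tokens shared by both queries
cache.prefill(system_prompt + [1, 2, 3])
first_cost = cache.tokens_computed  # nothing cached yet: all 15 tokens
cache.prefill(system_prompt + [7, 8])
second_cost = cache.tokens_computed - first_cost  # shared chunks reused
print(first_cost, second_cost)  # 15 2
```

The second query recomputes only its two new tokens; the three chunks covering the shared prompt come straight from the cache and are identical to what a fresh computation would produce.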

Why It Matters

This caching efficiency is particularly transformative for chatbots and agentic AI systems, where conversations and task logs grow continuously. By persisting and recalling relevant cached data, Tensormesh enables smoother, faster responses and lowers the computational overhead that typically bogs down real-time inference.
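A back-of-the-envelope calculation shows why growing conversations benefit most. The sketch below assumes a hypothetical chat in which each turn appends 200 new tokens. Without reuse, every turn re-processes the entire history; with reuse, only the new tokens are processed. The numbers are illustrative and are not Tensormesh’s measured results.

```python
from typing import List


def prefill_tokens(turn_lengths: List[int], reuse: bool) -> int:
    """Total tokens the model must prefill over a whole conversation.

    turn_lengths holds the (hypothetical) number of new tokens appended on
    each turn. This counts tokens only; it ignores that attention over a
    longer history still costs somewhat more per new token.
    """
    total = 0
    history = 0
    for new in turn_lengths:
        history += new
        total += new if reuse else history
    return total


turns = [200] * 5
without_reuse = prefill_tokens(turns, reuse=False)  # 200+400+600+800+1000
with_reuse = prefill_tokens(turns, reuse=True)      # 200 per turn
print(without_reuse, with_reuse, without_reuse / with_reuse)  # 3000 1000 3.0
```

The gap widens with every turn: without reuse the total grows roughly quadratically with conversation length, while with reuse it grows linearly.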

The alternative — building such systems in-house — can take teams months and dozens of engineers, Jiang said.

“Keeping the KV cache in secondary storage and reusing it efficiently without performance loss is an incredibly hard problem,” Jiang noted. “We’ve seen companies spend hundreds of thousands trying to do it. Our solution works out of the box.”
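One way to picture the idea of keeping caches in secondary storage is a two-tier store. When the fast tier (standing in for GPU memory) is full, the least recently used entry is spilled to a slower tier (standing in for CPU RAM or local disk) instead of being discarded, and it is promoted back on the next hit. This is a minimal sketch under those assumptions, not Tensormesh’s design. The capacity is hypothetical, the secondary tier here is unbounded, and the genuinely hard part Jiang describes, moving large tensors between tiers without stalling inference, is not modeled at all.

```python
from collections import OrderedDict
from typing import Dict, Hashable, Optional


class TieredKVStore:
    """Two-tier LRU store: evicted entries spill to secondary storage."""

    def __init__(self, gpu_capacity: int) -> None:
        self.gpu_capacity = gpu_capacity  # hypothetical fast-tier size
        self.gpu: OrderedDict = OrderedDict()  # fast tier, kept in LRU order
        self.secondary: Dict[Hashable, object] = {}  # slow tier, unbounded

    def put(self, key: Hashable, kv: object) -> None:
        self.secondary.pop(key, None)  # keep each key in only one tier
        self.gpu[key] = kv
        self.gpu.move_to_end(key)  # mark as most recently used
        while len(self.gpu) > self.gpu_capacity:
            old_key, old_kv = self.gpu.popitem(last=False)  # evict LRU entry
            self.secondary[old_key] = old_kv  # spill rather than discard

    def get(self, key: Hashable) -> Optional[object]:
        if key in self.gpu:
            self.gpu.move_to_end(key)
            return self.gpu[key]
        if key in self.secondary:
            kv = self.secondary.pop(key)  # slower fetch from secondary tier
            self.put(key, kv)  # promote back to the fast tier
            return kv
        return None  # true miss: the caller must recompute the KV entries


store = TieredKVStore(gpu_capacity=2)
for doc in ("doc-a", "doc-b", "doc-c"):
    store.put(doc, f"kv-{doc}")
print(list(store.gpu), list(store.secondary))  # ['doc-b', 'doc-c'] ['doc-a']
print(store.get("doc-a"))  # kv-doc-a
```

Fetching `doc-a` back from the slow tier is a hit rather than a recomputation; promoting it then pushes `doc-b`, now the least recently used entry, down to secondary storage.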

From Open Source to Enterprise

Tensormesh is now translating its open-source momentum into a commercial offering aimed at AI infrastructure providers, enterprise model teams, and LLM platform operators. The company plans to use the $4.5M round to expand its engineering team, deepen its integrations, and release managed versions of LMCache optimized for high-scale cloud environments.

As the AI sector grapples with skyrocketing GPU costs and sustainability concerns, Tensormesh sees an opening to become a foundational layer for inference optimization — helping teams do more with less hardware.

“AI performance isn’t just about bigger models or more GPUs,” said Jiang. “It’s about using what you already have more intelligently — and that’s where we come in.”

About Tensormesh

Tensormesh is a San Francisco–based AI infrastructure company optimizing inference efficiency for large language models and agentic systems. Founded by Junchen Jiang and Yihua Cheng, Tensormesh builds tools that maximize GPU utilization through advanced key-value caching and memory reuse. Its open-source project, LMCache, is already used by major players like Google and Nvidia, helping reduce inference costs by up to tenfold. Backed by Laude Ventures and Michael Franklin, Tensormesh is redefining how AI workloads scale in the age of generative intelligence.